Our research program focuses on intraoperative imaging, computer vision, artificial intelligence, augmented reality, biophotonics, and translational research. The overall goal of our program is to bring novel technologies and algorithms from the benchtop to the bedside of patients. We are developing interdisciplinary approaches that combine medical imaging, computer vision, edge computing, augmented reality, and medicine to solve challenging clinical problems.

Augmented Reality & Intraoperative Optical Imaging

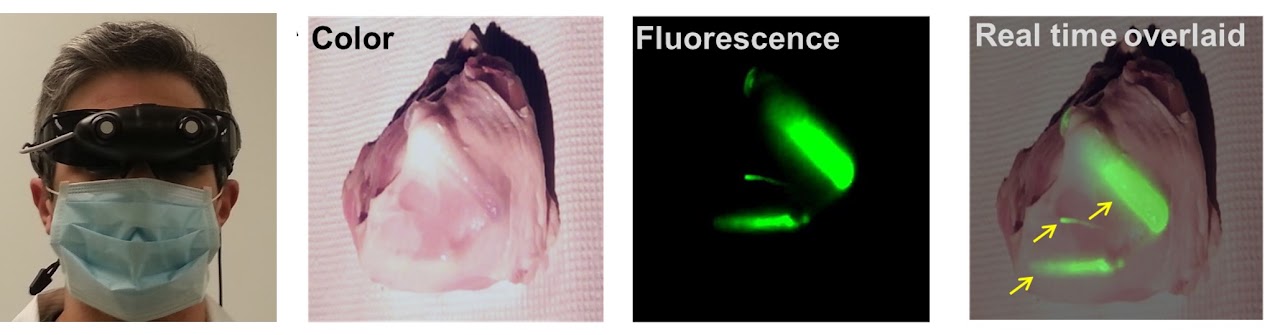

Traditional intraoperative imaging instruments display images on 2D monitors, which can distract the surgeon from focusing on the patient and compromise hand-eye coordination. We have invented and developed a wearable optical imaging and augmented reality (AR) display system (i.e., Smart Goggle) for guiding surgeries. Real-time 3D fluorescence image guidance can be visualized in AR. In addition, multiscale imaging involving in vivo microscopy and multimodal imaging using ultrasound can also be integrated. Clinical translation of our systems is ongoing at multiple leading hospitals, including Cleveland Clinic.

Real-time Intraoperative Optical Imaging and Multimodal Surgical Navigation

Surgeons need surgical navigation and intraoperative imaging for accurate surgery. However, current clinical surgical navigation systems have many drawbacks, including the need for fiducial markers, the lack of real-time registration updates, and the lack of functional imaging. We have developed a novel system that delivers simultaneous real-time multimodal optical imaging, CT-to-optical image registration, and dynamic fiducial-free surgical navigation. The system integrates real-time intraoperative optical imaging and dynamic CT-based surgical navigation, offering complementary functional and structural information for surgical guidance. To the best of our knowledge, this is the first report of a system capable of concurrent intraoperative fluorescence imaging and dynamic, real-time, CT-based navigation, in which multiple modalities are accurately registered.

Deep Learning and Medical Computer Vision

We have investigated and developed deep-learning-based methods for medical image segmentation and multimodal image registration. These methods enable automatic spine segmentation. In addition, we have developed a novel method for multimodal optical image registration.

Wearable Thermal-Color Dual-Modal Imaging System for COVID-19 Temperature Screening

The ongoing COVID-19 pandemic has devastated communities and disrupted life worldwide. Thermal imaging systems are beneficial for temperature screening because, in contrast to the contact thermography approach, the operator does not need to be physically close to the subjects. One of the greatest challenges of COVID-19 is effective screening at schools, universities, and military units, where large numbers of people gather. For rapid, high-throughput temperature screening, a wearable system is advantageous because it enables hands-free operation and allows the operator to move around freely. We recently developed a prototype wearable system capable of real-time thermal-color dual-modal imaging with 3D augmented reality capability. Its portable nature and AR display can potentially enable on-the-go screening.

Translational Research

We are collaborating with surgeons from multiple leading hospitals to translate our systems into clinical studies under Institutional Review Board (IRB) oversight. The technology we have developed can be broadly applied to multiple surgical subspecialties and clinical use cases. We intend to bring our technologies from the benchtop to the bedside of patients.